An Uber self-driving car traveling at 65 kilometers per hour struck a woman who was crossing a street in Tempe, Arizona. She died after being taken to the hospital. This is the world's first road accident in which a self-driving car hit and killed a pedestrian, and it has raised great public concern about the safety of self-driving cars. Here is a closer look.

Shortly after the incident, the chief of the Tempe Police Department told the media that the collision would have been unavoidable whether or not a human was driving, and that Uber may not be at fault.

However, is this really an unavoidable traffic tragedy?

Many possible causes of the accident

After the accident, many media and experts analyzed and speculated about the cause of the accident.

After watching the video, U.S. netizens said that a camera's “vision” is usually very poor in low light, and that a person watching with the naked eye might have noticed the pedestrian crossing earlier.

In a media interview, Yu Kai, the founder of Horizon Robotics, speculated that the self-driving car's prediction may have failed: the direction in which the obstacle was moving was not predicted accurately.

Zhu Jizhi, the founder of EyeQing Technology, offered a different view on his Weibo. In his opinion, the problem lies in the camera. The pedestrian moved from a dark area into a bright one, and because of the street lights the contrast between light and shade was too great. While she was in the shadow, the car did not detect her. By the time she walked into the bright area and the car detected her, it was too late.

He also believes that the lighting environment on roads at night is too complex, and that the dynamic range of car cameras needs to increase by more than 30 times before self-driving cars can be viable on the road.

Some industry analysts also suggest that the Uber self-driving car did detect the pedestrian but failed to brake or stop, resulting in the collision.

The ceiling of traditional imaging technology

As is well known, the vision systems in today's driverless cars are based on cameras and lidar. Industry insiders have compared the strengths and weaknesses of their visual performance:

It is not hard to draw a conclusion from the figure: no scheme is flawless. Cameras based on traditional imaging technology are strongly affected by lighting conditions, and in strong light, low light, backlight, or reflections they essentially fail.

Lidar, currently the most popular option, also has shortcomings that are hard to overcome. Leaving aside a cost that cannot be reduced quickly in the short term, and its low resolution (there are now 128-line and even 300-line lidars, but they are still not comparable to camera resolution), its biggest problem is that it cannot recognize color, so it cannot read traffic signs.

In addition, lidar is also affected by the environment. Even Tesla and Google admit that driverless cars loaded with expensive cameras and lidars lose roughly half of their capability in heavy snow.

Today's self-driving cars combine cameras with radar (lidar plus camera, or millimeter-wave radar plus camera) so that each sensor can make up for the other's deficiencies. However, this strategy clearly does not achieve a 1+1>2 effect. The reason is simple: it is difficult for one sensor to cover the other's weaknesses. In the fatal Uber crash, for example, the camera evidently failed to detect the pedestrian crossing the road because of the lighting (unless the car's brake system broke down), and even if the lidar detected a reflected signal, it could not classify it as a pedestrian. As experts have pointed out, lidar's limitations mean it is not designed to identify pedestrians. Its resolution is limited, its refresh rate is not high, and it cannot capture an object's color, so it is not good at distinguishing objects in real time.

Give self-driving cars a pair of eyes

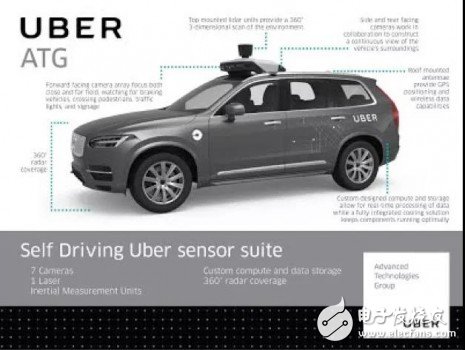

As the sensor diagram of the Uber self-driving car shows, the car involved in this accident was fitted with many cameras, and those cameras were working properly, yet the tragedy was not avoided. Why?

Quite simply, these cameras are not as good as the human eye.

As experts say, with traditional camera imaging technology a machine's visual ability cannot match human eyesight, or even come close. Traditional imaging technology focuses on processing images for human viewing, that is, on making images look good. A machine, however, does not need high pixel counts or beautification. It needs an accurate measurement of the real world: high color fidelity, crisp edges, and high sharpness.

This means that traditional cameras cannot support the normal operation of AI machines in complex lighting.

For a driver assistance system, the key challenge is to ensure that the system works properly under any environmental conditions (temperature changes, sunlight, darkness, or rain and snow) and that objects more than 300 meters away can be identified.

After the accident, Uber suspended road tests of its self-driving cars, and Toyota did the same. Nvidia is also reported to have suspended its road tests.
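To give a rough sense of what a 300-meter identification range means at the 65 km/h reported in this crash, the sketch below converts range and speed into a time budget and estimates a stopping distance. The system latency (1.0 s) and braking deceleration (7 m/s²) are illustrative assumptions, not figures from the article or from any vehicle involved.

```python
def kmh_to_ms(speed_kmh: float) -> float:
    """Convert kilometers per hour to meters per second."""
    return speed_kmh / 3.6


def time_to_reach(distance_m: float, speed_kmh: float) -> float:
    """Seconds until the car reaches an object distance_m ahead at constant speed."""
    return distance_m / kmh_to_ms(speed_kmh)


def stopping_distance(speed_kmh: float, latency_s: float, decel_ms2: float) -> float:
    """Distance covered during the system latency plus the braking distance v^2 / (2a)."""
    v = kmh_to_ms(speed_kmh)
    return v * latency_s + v ** 2 / (2 * decel_ms2)


if __name__ == "__main__":
    speed = 65.0      # km/h, the speed reported for the crash
    latency = 1.0     # s, assumed detection-to-brake latency (hypothetical)
    decel = 7.0       # m/s^2, assumed braking deceleration on dry road (hypothetical)

    print(f"Time to cover 300 m: {time_to_reach(300, speed):.1f} s")
    print(f"Stopping distance:   {stopping_distance(speed, latency, decel):.1f} m")
```

Under these assumptions the car needs a little over 40 meters to stop, so the value of a long identification range lies mainly in the extra seconds it gives the system to classify an object before braking is required.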

The light of hope

During a recent visit to EyeQing Technology, the staff demonstrated the visual effects of their latest AI vision imaging engine. In a dimly lit office, using only one camera equipped with the Eye Engine AI Vision Imaging Engine solution and a single light source, the computer display showed a bright, clear image with a high degree of color reproduction. In those conditions, the human eye could no longer distinguish the color or outline of the subject.

The staff explained that what EyeQing Technology hopes to solve is the automatic adaptability of AI machines under complex lighting.

Relevant data shows that both 2D and 3D cameras require an image sensor with a high dynamic range of at least 130 dB (dynamic range refers to the ratio between the brightest and darkest parts of an image). Only such a high dynamic range guarantees that the sensor can capture clear image information even when the sun shines directly on the lens. The dynamic range of an ordinary lens system is far below this value.

The staff explained that the Eye Engine solution offers an imaging dynamic range 18 dB higher than that of the human eye, so that clear color images can still be output in very low light where the human eye cannot discriminate colors.
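The decibel figures above can be turned into plain contrast ratios. The sketch below assumes the convention commonly used for image sensors, DR(dB) = 20·log10(brightest / darkest); the article does not say which convention its numbers use, so the results are indicative only.

```python
import math


def db_to_ratio(db: float) -> float:
    """Convert a dynamic range in dB to a brightest-to-darkest ratio (20*log10 convention)."""
    return 10 ** (db / 20)


def ratio_to_db(ratio: float) -> float:
    """Convert a brightest-to-darkest ratio to dB (20*log10 convention)."""
    return 20 * math.log10(ratio)


if __name__ == "__main__":
    # 130 dB requirement quoted for automotive image sensors
    print(f"130 dB  -> about {db_to_ratio(130):,.0f}:1")   # about 3,162,278:1
    # 18 dB advantage claimed over the human eye
    print(f"+18 dB  -> about {db_to_ratio(18):.1f}x")      # about 7.9x
    # "more than 30 times" increase in dynamic range mentioned earlier
    print(f"30x     -> about {ratio_to_db(30):.1f} dB")    # about 29.5 dB
```

Read this way, 130 dB corresponds to a contrast ratio of roughly three million to one, and an 18 dB advantage means handling a brightness ratio about eight times wider.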

In January this year, EyeQing Technology released the world’s first fully-developed AI vision front-end imaging chip, the “eyemore X42”.

The eyemore X42 imaging engine chip is reported to have 20 times the computing power of a traditional ISP, to use more than 20 new imaging algorithms, and to incorporate complex lighting data from more than 500 different scenes.

One important reason for this performance is that the eyemore X42 abandons the traditional ISP imaging architecture and adopts a new imaging engine architecture designed to solve imaging problems under complex lighting.

In addition, the eyemore X42 chip also features rich imaging and API interfaces.

Human eyes owe their superior vision not only to a powerful optical imaging system but, more importantly, to their interaction with the brain through the nerves. On this basis, EyeQing Technology also designed an architecture in which the imaging engine interacts with the back-end AI algorithm to learn what the AI needs from its images.

Zhu Jizhi, founder of EyeQing Technology, said that this interaction with the AI system will make the imaging system a truly organic part of AI.

Final words

At the "2018 Global AI Chip Innovation Summit" at the beginning of this month, EyeQing Technology won the "AI Pioneer of the Year" award alongside AI newcomers such as SenseTime and Horizon Robotics, reflecting the industry's recognition of its AI vision front-end imaging technology.

Indeed, as the suspension of testing by Uber and Toyota shows, the public's biggest concern about self-driving cars is safety. Solving the safety problem depends mainly on whether the vehicle itself can detect a hazard in time and respond to it in time. This requires the entire self-driving industry chain to mature, including road infrastructure and the rise of 5G networks, but most critically the vehicle itself needs a good pair of eyes. The ceiling of traditional camera imaging is already obvious. The new generation of technology represented by the Eye Engine AI vision front-end imaging engine may be the hope for breaking through it.

Appendix: 13 self-driving car accidents worldwide from 2015 to date

| Date | Synopsis of the incident |
| --- | --- |
| March 23, 2018 | A 2017 Tesla Model X traveling on Highway 101 in California crashed into the highway barrier and caught fire, and was struck from behind by two cars. |
| March 18, 2018 | An Uber self-driving car struck and killed a woman on a road in Arizona. |
| January 22, 2018 | A Tesla Model S in automatic driving mode crashed into a parked fire truck on Highway 405 in Los Angeles. |
| January 12, 2018 | A Tesla Model 3 ended up in a creek. |
| January 10, 2018 | In Pittsburgh, Pennsylvania, a van ran a red light and hit a Ford self-driving car. |
| December 7, 2017 | In Vancouver, a Cruise self-driving car operating in automatic driving mode was involved in an accident. |
| November 8, 2017 | A driverless bus collided with a truck in Las Vegas, USA. |
| March 24, 2017 | In Tempe, Arizona, an Uber self-driving car under test collided with an ordinary car. |
| September 23, 2016 | In Mountain View, USA, a Google driverless test vehicle was hit hard by a Dodge commercial truck. |
| May 7, 2016 | A Tesla Model S collided with a trailer crossing perpendicular to its path on a Florida highway. |
| February 14, 2016 | In Mountain View, Silicon Valley, a Google driverless car based on a modified Lexus struck the right side of a bus during a road test. |
| January 20, 2016 | On the Hebei section of the Beijing-Hong Kong-Macao Expressway, a Tesla in automatic driving mode ran straight into a road sweeper. |
| July 1, 2015 | A Google driverless car based on a modified Lexus was involved in a crash during street testing in Mountain View, California. |
