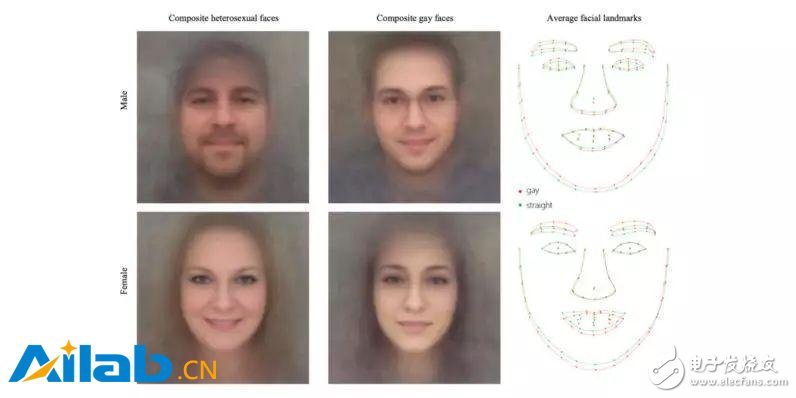

Recently, a Stanford University study claimed that its algorithm can infer a person's sexual orientation by analyzing the facial features and expressions of people in photos. Critics, however, argue that this is simply pseudoscientific physiognomy wrapped in the cloak of artificial intelligence. These outdated ideas, long abandoned by the Western scientific community, are now re-emerging under cover of new technology. On its own narrow terms, the Stanford study reports seemingly high accuracy: when analyzing a single photo, the algorithm correctly identified the sexual orientation of men 81% of the time and of women 71% of the time.

When the number of photos analyzed per person was increased to five, the algorithm's accuracy rose to 91% for men and 83% for women. The problem, however, is that the researchers tested the algorithm on pairs of people. Each pair contained one straight person and one gay person, so even an AI that guessed blindly would be right 50% of the time.
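To make the 50% baseline concrete, the short Python sketch that follows simulates a "classifier" that ignores the photos entirely and simply picks one member of each pair at random. The function name `paired_accuracy` and the simplified pairing scheme are illustrative assumptions, not the researchers' actual evaluation code.

```python
import random


def paired_accuracy(n_pairs: int, seed: int = 0) -> float:
    """Accuracy of a blind guesser on balanced pairs (hypothetical setup).

    Each simulated pair holds exactly one member of the target group and
    one member of the other group. The guesser never looks at the data;
    it just picks position 0 or 1 at random.
    """
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_pairs):
        truth = rng.randrange(2)  # hidden position of the target-group member
        guess = rng.randrange(2)  # coin-flip guess that ignores the photos
        correct += guess == truth
    return correct / n_pairs


print(f"{paired_accuracy(100_000):.3f}")
```

With many pairs, the blind guesser lands close to 0.5, which is why figures such as 81% or 91% should be read against a 50% chance level rather than against zero.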

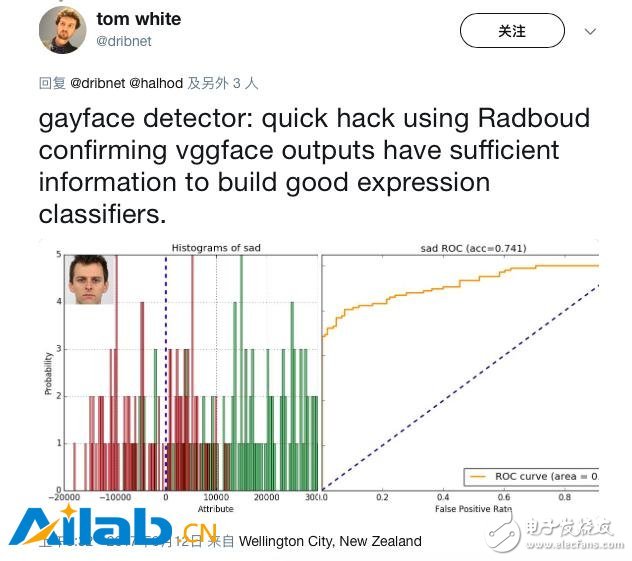

Moreover, the researchers claim that the algorithm's analysis focuses only on facial features, and that the VGG-Face software minimizes interference from transient factors such as lighting, pose, and expression. But Tom White, a researcher who works on AI facial recognition, said that VGG-Face is in fact very good at capturing exactly those transient elements.

The study's underlying data is itself biased.

Greggor Mattson, a sociology professor at Oberlin College in the United States, points out that because the photos were taken from dating sites, they were deliberately chosen by users to attract people of a particular sexual orientation. In other words, the photos show how users believe they should present themselves given society's expectations of their orientation; that is, they reflect stereotypes. Although the research team also tested the algorithm on material from outside the study, that material was itself biased. After all, not every gay man "likes" pages along the lines of "I am gay and proud," and those who do may be more inclined to conform to particular stereotypes. What the researchers ignore is the enormous range of atypical behavior within this group.

Kosinski, the study's lead author, acknowledged that his research might be wrong, saying that "to verify the correctness of the results, we have to do more research." But how can we be sure the research is not biased? Kosinski's response was:

"To test and verify the correctness of a result, you don't need to understand how the model works." The Verge, however, argues that it is precisely the opacity of such research that makes it misleading.

Artificial intelligence: a new tool for humans to build prejudice into

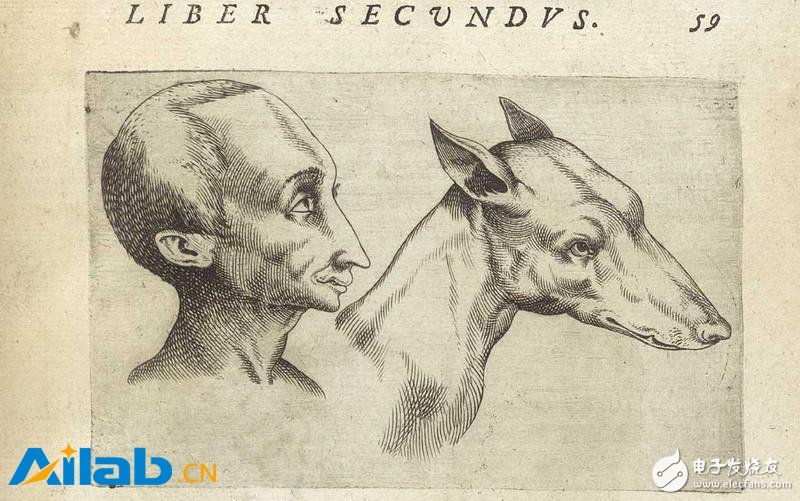

Throughout history, there have been many examples of humans casting their own prejudices into the best tools of their time. The idea of linking a person's appearance to their character and essence dates back to ancient Greece, and in the 19th century the tools of the day lent it an illusion of "science." Physiognomists of that era believed that the angle of a person's forehead, or the shape of their nose, could reveal whether that person was honest or had criminal tendencies. Although these claims have long been dismissed as pseudoscience, they are now being "reborn" in the age of artificial intelligence.

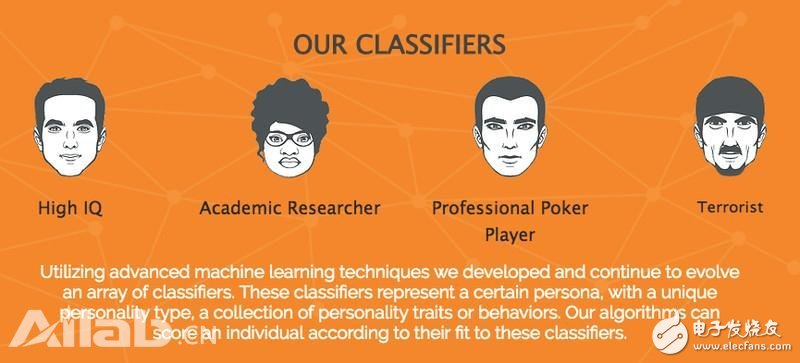

Last year, a research team from Shanghai Jiao Tong University also claimed to have developed a neural network system that could identify criminals, which angered the artificial intelligence community. Three researchers from Google wrote a lengthy critique of the work. A startup called Faception likewise claims to be able to identify terrorists through facial analysis.

But can artificial intelligence really analyze this data objectively? Jenny Davis, a sociology lecturer at the Australian National University, disagrees:

"Artificial intelligence is not really purely 'artificial.' Machines learn in the same way humans do. We extract and absorb the norms of social structure from culture, and artificial intelligence does the same. It will therefore rebuild, extend, and perpetuate the paths that we humans have laid out for it, and those paths will always reflect existing social norms."

Whether they judge a person's honesty or sexual orientation from their face, these algorithms rest on biological essentialism: the belief that the nature of human sexuality is rooted in the body. Davis also pointed out that such theories are "useful" because they let people extract certain traits from a specific group, define them as "secondary and inferior," and thereby hand those who are already biased a "just cause" for discrimination.

At the beginning of this year, computer scientists from the University of Bath and Princeton University used an association test modeled on the IAT (Implicit Association Test) to detect latent bias in algorithms, and found that algorithms, too, exhibit racial and gender bias. Even Google Translate cannot escape this: its algorithm "discovers" and "learns" the prejudices embedded in social conventions. In certain languages, a gender-neutral pronoun is translated as "he" or "she" depending on which adjective appears in the surrounding context.

With all this in mind, you may already see that the accuracy of Kosinski and Wang's Stanford study is not what matters most. If someone wants to believe that artificial intelligence can determine sexual orientation, they will use it regardless of how accurate it is. "What matters more, therefore, is that we understand the limitations of artificial intelligence and neutralize it before it causes harm," The Verge commented. The most troubling part is that, most of the time, we cannot even detect our own prejudices. How, then, can we expect the tools we build to be perfectly fair?
