We all know that artificial intelligence was not an overnight success. After years of development and accumulation, it is now experiencing a boom. From the early neural networks to the DNN, artificial intelligence has finally come out of its cold winters and into a revival.

The first AI winter began around 1975. After the Dartmouth conference in 1956, many funders, including national governments, the National Science Foundation, and the military, invested heavily and with high hopes. But after 1975, several things happened that pushed AI into winter.

The first was that AI could only solve toy domains (tasks as simple as toys). At that time, speech systems handled only about 10 words; for chess, it was about 20 words; and vision systems could not even recognize a chair. The second was that the United States had been at war and the oil crisis hit, so the economy was weak. In addition, the famous British scholar Lighthill said that AI was a waste of money, and research funding for AI was cut sharply. (Editor's Note: The report "Artificial Intelligence: A General Survey", published in 1973 and commonly known as the Lighthill report, stated that no part of the field had so far produced the major impact that had been promised. The British government subsequently cut funding for AI research at all but a few universities, among them Edinburgh, Sussex and Essex.)

Beginning in 1980, companies such as IBM started building expert systems, which can be described as limited applications. Despite their shortcomings, they could still do useful things, and they reportedly generated billions in output value. As a result, AI began to revive. I entered the field around this time, so I was very lucky.

I went to CMU (Carnegie Mellon University) in the 1980s. I remember that Japan was very rich at that time, buying buildings and setting up laboratories all over the United States. Japan then proposed its Fifth Generation Computer Systems (FGCS) project. There were also companies specializing in Lisp Machines (general-purpose computers designed to run the Lisp programming language efficiently through hardware support). It was a bit like today's DNN craze, when everyone is building DNN chips: back then everyone was building Lisp Machines and Thinking Machines' Connection Machines, and neural networks were just beginning to sprout.

However, by the mid-1990s, AI had gone cold for the second time. Why? The fifth-generation project failed, and Lisp Machines and Connection Machines did not work out. Neural networks, although interesting, did no better than some other statistical methods while using more resources. Everyone felt there was no hope, and AI entered its second winter.

The rise of statistical methods in the 1990s

Around the time of this winter, statistical methods, that is, methods that rely on data, emerged.

Before 1990, AI was pursued by studying how the human brain supposedly works, and we have plenty of reasons to believe that the human brain does not rely on big data. A child, for example, can tell dogs from cats after seeing only a few of each. Today's methods have to show a computer hundreds of thousands or even millions of pictures of dogs and cats before it can tell which is which. The data-driven approach began to sprout between the first and second AI winters. Although AI was founded by a group of computer scientists, there is a field extremely close to it called pattern recognition. Pattern recognition has always been done by engineers and statisticians, who have been working on it since the 1940s.

Everyone of our generation who studied computing knows two names: King-Sun Fu (K. S. Fu) and Julius T. Tou. If AI were to choose a 60-person Hall of Fame, King-Sun Fu would be in it; he was a giant of the field. Strictly speaking, Fu did not work on AI, but he can be included because of his work on pattern recognition. Pattern recognition itself had two camps: Statistical Pattern Recognition and Syntactic Pattern Recognition. In the 1980s the syntactic approach was very popular and no one paid attention to the statistical camp. After 1990, everyone used statistics.

Those of us working on speech saw this clearly, and we later introduced the Hidden Markov Model, a statistical method that is still very useful today. It is especially powerful on Wall Street: in financial investment and stock trading, much of the data is time series, which is where the hidden Markov model excels. It could even be said that the statistical approach was developed by those of us in speech. As early as 1980, we in speech had a saying: "There is no data like more data". Looking back, this was very forward-looking; it is the concept of big data. The amount of data in our time cannot be compared with today's, but we had already seen how important data was. IBM was remarkable in this regard. One of their speech managers said that every time we double the data, accuracy goes up; and every time we fire a linguist, accuracy also goes up.

Decision trees were also first used by speech researchers. Then came the Bayesian Network, which was very popular a few years ago. Now, of course, it is the deep neural network (DNN, an artificial neural network with multiple hidden layers between input and output). Why do I mention all this? Today, when people attend AI classes, perhaps 75% to 80% of the content is DNN. In fact, there is more to AI than that.

It is also very difficult to teach AI today. I have looked at recent AI teaching materials, such as those by Andrew Ng and others. In academia they still teach these other topics, but at most companies all the talk is about DNN. I don't think a good AI textbook can be found right now, because the early books either did not cover statistics or did not cover DNN. I also looked at the textbook by Stuart J. Russell of the University of California, Berkeley, and Peter Norvig (Artificial Intelligence: A Modern Approach), which mentions DNN only briefly. Writing an AI textbook may not be easy now: AI covers so many things that people dismiss as useless, whereas DNN really is useful.

Let me explain a little about the difference between DNN and general statistical methods. A statistical method needs a model, and a model comes with assumptions. Most of those assumptions are wrong, so the model can only approximate reality. When there is not enough data, you have to assume some distribution. When there is enough data, the advantage of DNN is that it can rely entirely on the data, although of course it requires a great deal of computation. So DNN does have its advantages. In the past, with statistical methods, we had to do feature extraction ourselves, using many techniques to build a simple knowledge representation. With DNN there is no need to extract features by hand; the raw data is used directly. So the reason AI is hard to teach now is that saying too little about DNN is not right, but saying too much, as if everything were DNN, is also a problem.

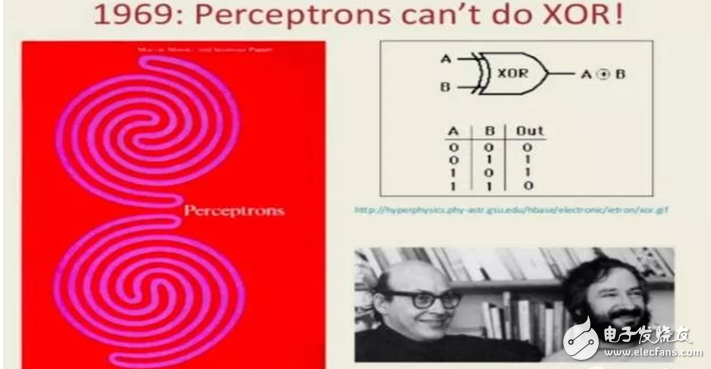

The ups and downs of neural networks

The earliest neural network was called the Perceptron, and it is tied to the first winter. Because the first perceptron had no hidden layer and no activation function, Marvin Minsky and Seymour Papert wrote a book, Perceptrons, saying that the perceptron could not even compute exclusive-or (XOR). So what use was the perceptron? This basically killed the progress of the entire first generation of neural networks.

► The perceptron could not perform even the simple logical operation "XOR", which to some extent brought on the AI winter.
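The XOR limitation can be checked directly. The sketch below is only an illustration, not code from Minsky and Papert's book: it assumes a single unit with a hard threshold and searches a small, arbitrarily chosen grid of weights (the names `perceptron` and `find_weights` are made up for this example). Weights turn up for AND and OR but not for XOR, since no single straight line separates XOR's two classes.

```python
from itertools import product

# The four possible inputs of a two-input logic gate.
INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

def perceptron(w1, w2, b, x1, x2):
    """Single unit with no hidden layer: threshold a weighted sum."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0

def find_weights(target):
    """Search a coarse grid (an assumption of this sketch) for weights
    that reproduce `target` on all four inputs; return None if none do."""
    grid = [i / 2 for i in range(-8, 9)]  # -4.0 to 4.0 in steps of 0.5
    for w1, w2, b in product(grid, repeat=3):
        outputs = [perceptron(w1, w2, b, x1, x2) for x1, x2 in INPUTS]
        if outputs == target:
            return (w1, w2, b)
    return None

AND = [0, 0, 0, 1]
OR = [0, 1, 1, 1]
XOR = [0, 1, 1, 0]

print("AND:", find_weights(AND))
print("OR: ", find_weights(OR))
print("XOR:", find_weights(XOR))
```

The grid search is not a proof, but the result matches the standard argument: XOR would need `w1 + b > 0` and `w2 + b > 0` while also `b <= 0` and `w1 + w2 + b <= 0`, and these four conditions contradict each other.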

People later realized this was a misreading: the book's claims were not actually that strong, but it did have a great impact. It was not until the 1980s that people working in cognitive psychology, such as Rumelhart and Hinton, revived the field.

Hinton worked on cognitive psychology early on. He first went to UCSD (University of California, San Diego) and later to CMU. Rumelhart, Hinton and McClelland revived the multi-layer perceptron by adding hidden layers and the back-propagation algorithm, and with that the neural network came back to life. Moreover, once a neural network has a hidden layer, in fact just one layer, plus an activation function, it can approximate functions; some people have even proved that it can approximate any function. So neural networks suddenly became popular. The Convolutional Neural Network (CNN) started to appear at that time, followed by the Recurrent Neural Network (RNN), because dealing with past history requires memory and the ability to look back. The Time-Delay Neural Network (TDNN), used for speech and natural language processing, also appeared.
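To make the effect of a hidden layer concrete, here is a minimal sketch of a two-layer network that computes XOR. The weights are hand-picked for illustration (an assumption of this example; in practice they would be learned, for instance by back-propagation), and the names `step` and `xor_net` are hypothetical.

```python
def step(z):
    """Hard-threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0

def xor_net(x1, x2):
    """Two-layer network with hand-picked weights that computes XOR."""
    # Hidden layer: two threshold units looking at the same inputs.
    h_or = step(x1 + x2 - 0.5)   # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)  # fires only if both inputs are 1
    # Output unit: "OR but not AND", which is exactly XOR.
    return step(h_or - h_and - 0.5)

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, "->", xor_net(a, b))
```

The hidden units re-describe the inputs as "at least one is on" and "both are on". In that new representation a single threshold unit can separate the classes, which it cannot do on the raw inputs.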

However, there was not enough data at the time, and with too little data a model easily overgeneralizes from a biased sample. The second factor was that computing resources were insufficient, so not many hidden layers could be added. So although neural networks were interesting and could solve problems, simpler statistical methods such as the Support Vector Machine (SVM) did just as well or slightly better. That is why the second AI winter came in the 1990s, and it lasted until the emergence of DNN.

AI recovery

The recovery of AI may have started in 1997, when Deep Blue defeated chess champion Garry Kasparov. Here I want to mention a person named Feng-hsiung Hsu. He was at CMU working on a project called "Deep Thought", and the architecture was basically already in place. IBM was very smart. They visited CMU, saw Hsu's group, and without spending much, two million at most, hired the group away to work at IBM. IBM saw at the time that it would be possible to defeat the world champion within five years. In fact, the real contribution was made at CMU. Hsu later left IBM, joined us, and stayed until he retired. The AI recovery was really just beginning. Some people say this did not help the AI recovery, because Deep Blue beat the chess champion not through a particularly remarkable algorithm but because they built a special chip that made it very fast. Of course, AlphaGo is also very fast; computing fast is always important.

In 2011, IBM built a question-answering machine called Watson, which defeated the champions of the game show Jeopardy. Jeopardy is actually a rather boring game; it is basically a memory game built on general-knowledge questions. So Watson beating people was not really a big deal.

By 2012, the recovery of AI was already very obvious. Machine learning and big data mining had become mainstream and were used in almost all research, although they were not yet called AI. In fact, for a long time those of us in speech and vision did not use the term AI, because the name had something of a bad reputation. When people heard "AI", they assumed it did not work. During the second AI winter, whenever I heard that someone was doing AI, I assumed they would not get anywhere. In fact, machine learning is a part of AI.

► From left to right: Yann LeCun, Geoff Hinton, Yoshua Bengio, Andrew Ng

Now back to deep learning. Three people have contributed a great deal to it. The first is Hinton. He is truly remarkable: when no one cared about neural networks, he kept working on them tirelessly. The second is Yann LeCun, who works on CNN. He has worked on CNN his whole career and kept at it through the AI winter, so many of today's CNNs trace back to Yann LeCun. The third is Yoshua Bengio. So when people still put out lists such as "China's top ten AI leaders", I find it rather funny. Those who talk about AI in public, or companies, are a different matter from scientists; scientists keep working even when everyone else thinks it is winter.

So when we talk about DNN and AI today, remember the saying: earlier generations plant the trees, and later generations enjoy the shade. Over these 61 years of development, these people worked hard, and they deserve to be remembered. The people who talk about AI on stage today are all reaping the harvest. For them to claim that they contributed to AI is, I think, going too far.

There is one more thing related to AI. Everyone remembers that a few years ago Xbox had a device called Kinect, which you could use when playing games. I think it was the first mainstream motion- and voice-sensing device to be released. Then came Apple's Siri in 2011, Google's voice recognition products in 2012, and Microsoft's products in 2013, all of which were part of AI's recovery. By 2016, AlphaGo had defeated Lee Sedol, and it later defeated Ke Jie. With that, AI had completely recovered.

Today's AI

DNN, DNN, and still DNN.

I do not mean to downplay the importance of DNN, but to say that DNN represents all of intelligence is an overstatement. DNN is certainly very useful: for machine vision there is CNN; for natural language or speech there are RNN and Long Short-Term Memory (LSTM). In computer vision there is a dataset called ImageNet. We are proud that almost two years ago, Microsoft recognized objects on that dataset as well as people do, or even better.

The same is true for speech. About a year ago, Microsoft surpassed humans on the Switchboard tasks. Machine translation is something I believe everyone has used, perhaps every day. Even things that seem creative have appeared; Xiaoice, for example, can write poetry. I have also seen many pictures drawn by computers, and music composed by computers seems creative as well.

However, although AI is very popular, everyone has also heard of machine learning and big data, and people in research have heard of big data mining as well. So how different are the three? I often say that they are not exactly the same, but today the overlap among them may exceed 90%. So in the end, is it AI that is popular, or big data, or machine learning? Does it really matter that much?
